
6 Ways ChatGPT Can And Probably Will Destroy Our Future

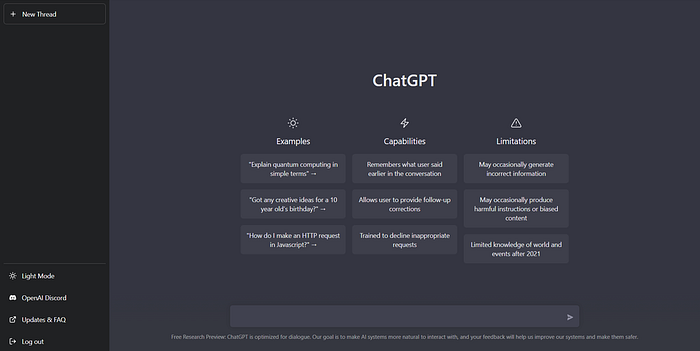

The most talked-about AI app right now is ChatGPT. If you haven’t heard of it, ChatGPT is OpenAI’s highly advanced generative AI chatbot, built on the company’s GPT-3.5 series of large language models (LLMs). Simply put, it is computer software that can “speak” to us in a way that closely resembles how we would communicate with a real person.

ChatGPT’s impressive versatility has stoked widespread curiosity about the range and limits of artificial intelligence. Already, there is much conjecture about how it will affect a vast array of human occupations, from customer service to programming. Here are six ways in which the extraordinarily advanced AI threatens our future:

1. Drastic Increase in Efficient Cybercrime

Models like ChatGPT have made it easier for fraudsters to write convincing, hard-to-detect emails. Unlike in the past, attackers now have a wide variety of cyber tools at their disposal and can use them to pursue several goals at once. More convincing emails, for example, make more sophisticated phishing attacks possible.

Using the bot to automate phishing campaigns, from sending out emails to harvesting personal information, raises significant security concerns. The result can be financial losses and serious confusion over who is to blame.

Only a few weeks after ChatGPT’s release, Israeli cybersecurity firm Check Point demonstrated how the web-based chatbot, combined with OpenAI’s code-writing system Codex, could generate a phishing email capable of carrying a malicious payload.

Sergey Shykevich, manager of Check Point’s threat intelligence group, told TechCrunch that this kind of application demonstrates ChatGPT’s “potential to significantly alter the cyber threat landscape” and is “another step forward in the dangerous evolution of increasingly sophisticated and effective cyber capabilities.”

Check Point has also sounded the alarm about the chatbot’s potential to help attackers develop malicious software. Its researchers say they have seen at least three verified instances of hackers…